Selenium is one of the most popular tools for automating web testing, but one common challenge that can disrupt a team's productivity is flaky tests. Flaky tests are tests that produce inconsistent outcomes, passing or failing unpredictably without any change to the code under test. They are time-consuming and frustrating, reducing productivity and making test results hard to trust. In this article, we will explore strategies for achieving test stability and managing flaky Selenium tests efficiently.

How common are Flaky Tests?

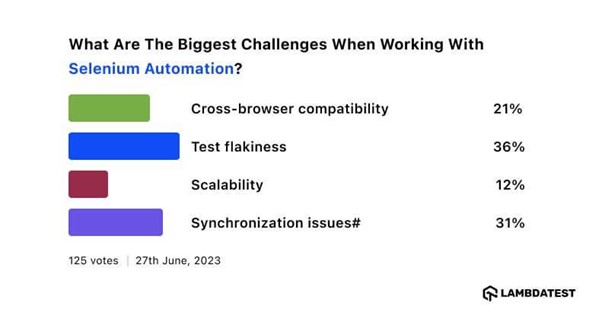

Unfortunately, flaky tests are more common than you might think. A recent survey of software developers found that 59 percent deal with flaky tests on a daily, weekly, or monthly basis. In fact, in one of our recent social media polls, respondents overwhelmingly voted for “test flakiness” as the biggest challenge when working with Selenium automation.

After collecting a large sample of internal test outcomes over one month, Google published a presentation, The State of Continuous Integration Testing @Google, which revealed some interesting insights.

- 84 percent of the test transitions from Pass -> Fail were from flaky tests.

- Only 1.23 percent of tests ever found a breakage.

- Almost 16 percent of their 4.2 million tests showed some level of flakiness.

- Flaky failures often block and delay releases.

- Between 2–16 percent of their compute resources were spent on re-running flaky tests.

Google concluded that:

“Test systems should be able to manage a certain level of flakiness”
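
One common way a test system can tolerate some flakiness is to rerun a failed test a limited number of times and report any test that passes only on a retry as flaky, rather than quietly counting it as a pass. The sketch below assumes each test is a zero-argument callable that returns True on success; `run_with_reruns`, `Outcome`, and the test names are hypothetical and not part of Selenium or any particular CI tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Outcome:
    name: str
    status: str    # "passed", "flaky", or "failed"
    attempts: int  # how many times the test was executed


def run_with_reruns(tests: Dict[str, Callable[[], bool]], max_reruns: int = 2) -> List[Outcome]:
    """Run each test, retrying a failure up to max_reruns extra times.

    A test that fails first but passes on a retry is reported as "flaky",
    so it stays visible for investigation instead of counting as a clean pass.
    """
    outcomes = []
    for name, test in tests.items():
        results = []
        for _ in range(max_reruns + 1):
            results.append(test())
            if results[-1]:
                break  # stop retrying as soon as the test passes
        if results[0]:
            status = "passed"
        elif results[-1]:
            status = "flaky"
        else:
            status = "failed"
        outcomes.append(Outcome(name, status, len(results)))
    return outcomes


if __name__ == "__main__":
    flaky_results = iter([False, True])  # fails once, then passes
    demo_tests = {
        "test_login": lambda: True,
        "test_search": lambda: next(flaky_results),
        "test_checkout": lambda: False,
    }
    for outcome in run_with_reruns(demo_tests):
        print(outcome)
```

Keep the retry budget small: reruns cost compute, and a "flaky" label is a prompt to fix the test, not a permanent pass.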

What causes a Flaky Selenium test suite?

The Selenium WebDriver language bindings are a thin wrapper around the W3C WebDriver protocol used to automate browsers. Selenium WebDriver sends commands to the REST endpoints defined by the W3C WebDriver protocol to perform browser actions such as starting the browser, typing into fields, and clicking elements, as described in the specification.
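
To make the "thin wrapper" point concrete, here is a sketch of the HTTP commands a binding sends for a simple login step, following the endpoint paths and JSON bodies in the W3C WebDriver specification. It only builds the requests (there is no HTTP client and no real driver), and the session and element IDs are placeholders; the helper function names are hypothetical.

```python
import json
from typing import Any, Dict, Tuple

# Key the W3C spec uses to identify a web element reference in JSON responses.
ELEMENT_KEY = "element-6066-11e4-a52e-4f735466cecf"

Command = Tuple[str, str, Dict[str, Any]]  # (HTTP method, path, JSON body)


def new_session(browser_name: str) -> Command:
    return ("POST", "/session", {"capabilities": {"alwaysMatch": {"browserName": browser_name}}})


def navigate_to(session_id: str, url: str) -> Command:
    return ("POST", f"/session/{session_id}/url", {"url": url})


def find_element(session_id: str, using: str, value: str) -> Command:
    # `using` is a W3C locator strategy such as "css selector" or "xpath".
    return ("POST", f"/session/{session_id}/element", {"using": using, "value": value})


def element_id_from_response(response: Dict[str, Any]) -> str:
    # A Find Element response looks like {"value": {ELEMENT_KEY: "<element id>"}}.
    return response["value"][ELEMENT_KEY]


def send_keys(session_id: str, element_id: str, text: str) -> Command:
    return ("POST", f"/session/{session_id}/element/{element_id}/value", {"text": text})


def click(session_id: str, element_id: str) -> Command:
    return ("POST", f"/session/{session_id}/element/{element_id}/click", {})


if __name__ == "__main__":
    sid, eid = "SESSION_ID", "ELEMENT_ID"  # placeholders; a real driver returns these
    for method, path, body in [
        new_session("chrome"),
        navigate_to(sid, "https://example.com/login"),
        find_element(sid, "css selector", "#username"),
        send_keys(sid, eid, "alice"),
        click(sid, eid),
    ]:
        print(method, path, json.dumps(body))
```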

Selenium 4 introduced a major architectural upgrade: the WebDriver API is now standardized on the W3C protocol, and the legacy JSON Wire Protocol has been fully deprecated. Because the W3C protocol itself is deterministic, Selenium WebDriver is deterministic as well. Major browser drivers such as ChromeDriver and GeckoDriver have also fully adopted the W3C protocol. In short, Selenium 4 makes cross-browser testing more reliable and effective than ever.

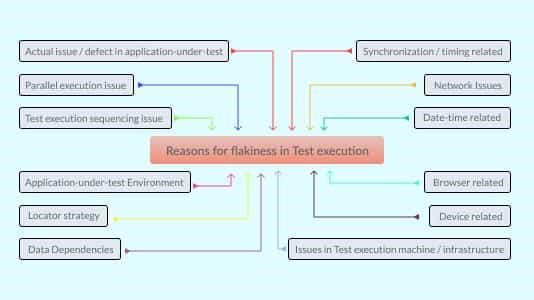

So where does test flakiness come from?

Common causes of Flakiness in the Selenium Test suite

The following are the key causes of flaky tests, along with ways to address them and achieve build stability:

1. Flaky Testing Environment

The test environment is the number one factor contributing to a flaky Selenium test suite. This includes the software in use, network speed, the browser's host machine, the website's servers, and so on. Common issues include:

- Incorrect software versions used to run tests.

- An unstable environment for the application under test (AUT).

- Browser-related issues.

- Resource limits on the machine running the tests, such as memory and processing speed.

- Slow or unstable network connectivity.
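
Software versions are one part of the environment you can control. The sketch below shows a hypothetical preflight check that refuses to trust a run when the browser, driver, or Selenium version differs from what the team pinned. The `PINNED` values and function names are assumptions for illustration; in a real suite you might collect the actual values from, for example, the session's `browserVersion` capability and `selenium.__version__`.

```python
from typing import Dict, List

# Hypothetical pinned versions; in practice these might live in CI config or a lock file.
PINNED: Dict[str, str] = {"browser": "126", "driver": "126", "selenium": "4.21"}


def matches(pinned: str, actual: str) -> bool:
    """True if `actual` starts with the same dot-separated components as `pinned`."""
    wanted = pinned.split(".")
    return actual.split(".")[: len(wanted)] == wanted


def environment_mismatches(actual: Dict[str, str], pinned: Dict[str, str] = PINNED) -> List[str]:
    """Return a human-readable problem for every component that is missing or off-version."""
    problems = []
    for name, wanted in pinned.items():
        found = actual.get(name)
        if found is None:
            problems.append(f"{name}: not reported (expected {wanted}.x)")
        elif not matches(wanted, found):
            problems.append(f"{name}: expected {wanted}.x, found {found}")
    return problems


if __name__ == "__main__":
    # Values you might collect at suite start-up (illustrative only).
    reported = {"browser": "127.0.6533.72", "driver": "126.0.6478.126", "selenium": "4.21.0"}
    print("\n".join(environment_mismatches(reported)) or "environment OK")
```

Comparing whole version components (rather than string prefixes) avoids treating "126" as a match for a pin of "12".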

Unfortunately, we don't have complete control over the randomness these infrastructure issues introduce. Many projects are therefore moving to cloud-based test environments such as LambdaTest for a more stable setup.

LambdaTest is a cloud-based cross-browser testing platform that supports Selenium Grid, addressing many of the problems you face when running automated tests on your local machine. It provides a Selenium Grid with 3000+ online browser and OS combinations, so you can run Selenium automation tests effortlessly. The LambdaTest Selenium automation platform also lets you run Selenium IDE tests on secure, scalable Selenium infrastructure.

2. Integration with Unreliable Third-Party Tools and Apps

The less control you have over your test environment, the less predictable your tests become. Flaky tests can occur when your test cases rely on unreliable third-party APIs or on functionality maintained by another team.

Common issues are:

- Third-party contract changes

- Third-party system errors

- Unreliable network connections
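
A common way to keep these third-party failures out of most test runs is to put the dependency behind an interface and substitute a deterministic stub, keeping a smaller set of tests against the real service. This is a code-level sketch of that principle; for browser tests the equivalent usually means pointing the AUT at a mock server. `price_in_eur`, `UnreliableLiveRates`, and `StubRates` are hypothetical names.

```python
from typing import Protocol


class RatesClient(Protocol):
    def usd_to_eur(self) -> float: ...


def price_in_eur(amount_usd: float, rates: RatesClient) -> float:
    """Code under test: converts a price using a third-party exchange-rate service."""
    return round(amount_usd * rates.usd_to_eur(), 2)


class UnreliableLiveRates:
    """Hypothetical stand-in for a real third-party API that times out now and then."""

    def __init__(self, failures_before_success: int) -> None:
        self._remaining_failures = failures_before_success

    def usd_to_eur(self) -> float:
        if self._remaining_failures > 0:
            self._remaining_failures -= 1
            raise TimeoutError("rates API timed out")
        return 0.92


class StubRates:
    """Deterministic test double that returns a fixed, contract-shaped value."""

    def __init__(self, rate: float) -> None:
        self._rate = rate

    def usd_to_eur(self) -> float:
        return self._rate


if __name__ == "__main__":
    print(price_in_eur(100, StubRates(0.9)))  # same result on every run
    try:
        price_in_eur(100, UnreliableLiveRates(failures_before_success=1))
    except TimeoutError as err:
        print("live dependency failed:", err)
```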

3. Lack of Synchronization

A web application consists of multiple interacting layers. How these layers interact directly affects the app's performance, including resource usage, network speed, HTTP handling, and rendering. So if the application you are testing makes asynchronous calls to other frames or layers, expect those operations to take varying amounts of time, and use waits so the test proceeds only after the call completes successfully. If there is no synchronization between the automation test and the AUT, test outcomes become flaky.

Common causes are:

- Asynchronous processing.

- Slow page loads caused by front-end processing or by heavy elements, content, and frames.

- Delayed response from database or API.

- Using incorrect wait strategies. The actual delay depends on many external factors, so a test script may pass or fail depending on whether the delay hard-coded in the script happened to be long enough.
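
The last point can be reproduced without a browser. In this sketch a `threading.Timer` stands in for an asynchronous call in the AUT (a hypothetical simulation, not Selenium code): a fixed sleep gives the right answer only when the load happens to finish first, while polling for the actual state adapts to however long the load takes, up to a timeout.

```python
import threading
import time


def simulate_async_load(load_delay_s: float, state: dict) -> threading.Timer:
    """Hypothetical stand-in for an AJAX call: sets state['loaded'] after a delay."""
    timer = threading.Timer(load_delay_s, state.__setitem__, args=("loaded", True))
    timer.start()
    return timer


def check_after_fixed_sleep(load_delay_s: float, sleep_s: float) -> bool:
    state = {"loaded": False}
    timer = simulate_async_load(load_delay_s, state)
    time.sleep(sleep_s)  # hard-coded delay: correct only if it happens to be long enough
    loaded = state["loaded"]
    timer.cancel()
    return loaded


def check_with_polling(load_delay_s: float, timeout_s: float, poll_s: float = 0.01) -> bool:
    state = {"loaded": False}
    timer = simulate_async_load(load_delay_s, state)
    deadline = time.monotonic() + timeout_s
    try:
        while time.monotonic() < deadline:
            if state["loaded"]:
                return True  # proceed as soon as the real condition holds
            time.sleep(poll_s)
        return state["loaded"]
    finally:
        timer.cancel()


if __name__ == "__main__":
    # The same 0.2 s sleep against a fast and a slow load, then polling against the slow one.
    print(check_after_fixed_sleep(load_delay_s=0.05, sleep_s=0.2))
    print(check_after_fixed_sleep(load_delay_s=0.5, sleep_s=0.2))
    print(check_with_polling(load_delay_s=0.5, timeout_s=3.0))
```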

4. Poorly written tests

Another common and unsurprising cause is a poorly written test case. A bad test case often produces inconsistent outcomes.

Common issues are:

- The test depends on earlier tests.

- The test is large and covers a lot of logic.

- The test uses a fixed wait time.
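
The first issue, a test that depends on earlier tests, usually comes from shared mutable state. In this minimal sketch (the cart and all function names are hypothetical), the "shared" checks give different results depending on run order, while the "isolated" checks build fresh state each time and pass in any order. They are named `check_*` rather than `test_*` so a test runner does not collect them.

```python
from typing import Callable, Dict, List, Optional

# Order-dependent version: both checks share one module-level cart.
shared_cart: List[str] = []


def check_add_item_shared() -> bool:
    shared_cart.append("book")
    return shared_cart == ["book"]


def check_cart_empty_shared() -> bool:
    return shared_cart == []


# Isolated version: every check builds its own fresh state.
def new_cart() -> List[str]:
    return []


def check_add_item_isolated() -> bool:
    cart = new_cart()
    cart.append("book")
    return cart == ["book"]


def check_cart_empty_isolated() -> bool:
    return new_cart() == []


def run(checks: List[Callable[[], bool]], reset: Optional[Callable[[], None]] = None) -> Dict[str, bool]:
    """Run checks in the given order and map each name to pass/fail."""
    if reset is not None:
        reset()  # start every suite run from the same initial state
    return {check.__name__: check() for check in checks}


if __name__ == "__main__":
    for order in ([check_add_item_shared, check_cart_empty_shared],
                  [check_cart_empty_shared, check_add_item_shared]):
        print(run(order, reset=shared_cart.clear))
```

In the first order, `check_cart_empty_shared` fails because the previous check left an item behind; in the second order, both pass.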

5. Data Dependencies

Test data issues can also cause test failures, but they are usually easy to spot and fix.

Common causes are:

- Hard-coded data.

- Inadequate test data preparation.

- Sharing the same data across multiple tests, which can corrupt it.

- Data corrupted by colleagues who use and modify the same records.
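
A minimal sketch of the usual remedy: generate fresh data for each test instead of hard-coding it. The `FakeUserStore` below is a hypothetical in-memory stand-in for a shared test database with a unique-username constraint; a hard-coded username collides on the second run, while UUID-based data does not.

```python
import uuid
from typing import Dict


class FakeUserStore:
    """Hypothetical in-memory stand-in for a shared test database
    that enforces unique usernames, as many real schemas do."""

    def __init__(self) -> None:
        self._users: Dict[str, str] = {}

    def create(self, username: str, email: str) -> str:
        if username in self._users:
            raise ValueError(f"username already exists: {username}")
        self._users[username] = email
        return username


def unique_user(prefix: str = "qa") -> Dict[str, str]:
    """Generate fresh data per test so repeated or parallel runs never collide."""
    token = uuid.uuid4().hex[:8]
    return {"username": f"{prefix}_{token}", "email": f"{prefix}_{token}@example.test"}


if __name__ == "__main__":
    store = FakeUserStore()
    store.create("testuser", "testuser@example.test")  # hard-coded: fine the first time...
    try:
        store.create("testuser", "testuser@example.test")  # ...but a rerun or parallel test collides
    except ValueError as err:
        print("hard-coded data:", err)
    for _ in range(2):
        user = unique_user()
        print("unique data:", store.create(user["username"], user["email"]))
```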

6. Locator strategy

Another major cause of flaky tests is the use of unreliable locators.

Common issues are:

- Using hard-coded, lengthy, and brittle XPath expressions.

- Not handling dynamic elements properly, and relying on locators whose values change each time the application under test (AUT) renders.

For example, an absolute XPath is rigid, lengthy, and error-prone.

Consider a scenario where you are automating tests for a login form on a website, and you use an absolute XPath to locate the “Username” input field. That absolute XPath might look something like this:

/html/body/div[1]/div[2]/form/div[1]/input

Now imagine that the page structure changes slightly after a redesign or an update. Perhaps the login form gets wrapped in an extra div, or the form's structure is modified. The absolute XPath no longer matches, and your test script fails.

To fix this, use a relative XPath or another locator strategy that depends less on the page's absolute structure. For instance, a relative XPath targeting the “Username” input field might look like this:

//input[@id='username']

Relative XPaths are more resilient to changes in the page structure, which makes them a better choice for maintainable, robust automated test scripts.
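
The login form example above can be checked with Python's standard `xml.etree.ElementTree`, which supports a subset of XPath (including positional `[n]` and `[@attr='value']` predicates). This is only a stand-in for a browser's XPath engine, and the two page snippets are hypothetical; the absolute path breaks once the form is wrapped in an extra div, while the relative path keeps working.

```python
import xml.etree.ElementTree as ET

ORIGINAL_PAGE = """
<html><body>
  <div>
    <div>logo</div>
    <div>
      <form>
        <div><input id="username"/></div>
        <div><input id="password"/></div>
      </form>
    </div>
  </div>
</body></html>
"""

# After a "redesign": the form is wrapped in one extra <div>.
REDESIGNED_PAGE = ORIGINAL_PAGE.replace("<form>", "<div><form>").replace("</form>", "</form></div>")

# ElementTree paths are evaluated from the <html> root, so the leading "/html" is dropped.
ABSOLUTE = "./body/div[1]/div[2]/form/div[1]/input"
RELATIVE = ".//input[@id='username']"


def locate(page: str, path: str):
    """Return the id of the first element matching `path`, or None if nothing matches."""
    element = ET.fromstring(page.strip()).find(path)
    return None if element is None else element.get("id")


if __name__ == "__main__":
    for label, page in (("original", ORIGINAL_PAGE), ("redesigned", REDESIGNED_PAGE)):
        print(label, "absolute:", locate(page, ABSOLUTE), "relative:", locate(page, RELATIVE))
```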

7. Unintentional Invocation of Load Testing

Sometimes you introduce unnecessary complexity into your test framework, which leads to unexpected results. One such example is parallel execution. As the test suite grows, reducing overall execution time through parallel testing makes sense. However, running too many tests in parallel can overload the AUT and its environment, effectively turning a functional test run into an unintended load test and increasing flakiness.
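
A minimal simulation of that effect, assuming a hypothetical AUT that starts returning errors once more than `capacity` requests are in flight. With the worker pool bounded below that capacity, every simulated test passes; with too many workers, some fail for reasons that have nothing to do with the code under test. The real safe limit depends on your environment and has to be measured.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class FakeServer:
    """Hypothetical AUT that errors when more than `capacity` requests are in flight."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._active = 0
        self._lock = threading.Lock()

    def handle(self, work_s: float = 0.2) -> bool:
        with self._lock:
            self._active += 1
            overloaded = self._active > self.capacity
        try:
            time.sleep(work_s)  # simulated request processing
            return not overloaded
        finally:
            with self._lock:
                self._active -= 1


def run_suite(num_tests: int, max_workers: int, capacity: int = 4) -> int:
    """Run num_tests simulated tests in parallel and return how many failed."""
    server = FakeServer(capacity)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda _: server.handle(), range(num_tests)))
    return results.count(False)


if __name__ == "__main__":
    print("bounded (2 workers) failures:", run_suite(num_tests=8, max_workers=2))
    print("unbounded (8 workers) failures:", run_suite(num_tests=8, max_workers=8))
```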

Other reasons

Beyond the causes listed above, a few other reasons are worth mentioning:

- Incorrect configuration of modules used in the test framework

- Using tools that are poorly suited to the app under test (e.g., for Angular apps, Protractor was long recommended over plain Selenium, although Protractor itself has since been deprecated)

- Using deprecated modules

- Improper selection/usage of waits

Tips to decrease Flakiness in a Selenium test suite

1. Stabilize your test environment

An unstable environment contributes to a flaky Selenium test suite. One effective way to stabilize the test environment is to use a cloud-based test platform. The main benefits of cloud-based testing are:

- High performance

- Scalability

- Cost-effectiveness

- Faster test execution

- Customization

LambdaTest offers a cloud-based Selenium Grid for running your Selenium automation scripts online, letting you develop, test, and deliver faster by removing infrastructure limitations. The grid is also built on Selenium 4, bringing reliability and efficiency to your automation code.

If you are a tester or developer looking to take your skills to the next level, LambdaTest's Selenium 101 certification can help you reach that goal.

2. Synchronous Wait

According to Alan Richardson, synchronization issues are the top reason for automation failures, and state-based synchronization is the ideal way to fix them. Implementing synchronous waits is an effective strategy for reducing flakiness in a Selenium test suite. By using explicit waits rather than implicit ones, QA testers can wait for specific conditions to be met before proceeding with test execution, confirming that the app is in the desired state. Tuning wait times to the app's characteristics and load times is crucial to balance test reliability and efficiency. Leveraging Selenium's expected conditions and retry mechanisms further improves the robustness of the test suite, letting testers handle transient failures gracefully. Combining synchronous waits with the Page Object Model (POM) also improves test code maintainability by encapsulating synchronization logic within page objects.
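
In Selenium's Python bindings, an explicit wait is typically written as `WebDriverWait(driver, 10).until(EC.visibility_of_element_located((By.ID, "username")))`. To show the mechanics without a browser, the sketch below implements a simplified, hypothetical `wait_until` that polls a condition and treats listed exceptions as "not ready yet", and a `LoginPage` page object that keeps that synchronization out of the test itself. `FakeDriver` simulates an element rendered late by asynchronous JavaScript; none of these names are Selenium APIs.

```python
import time
from typing import Callable, Dict, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    pass


def wait_until(condition: Callable[[], T], timeout_s: float = 5.0, poll_s: float = 0.1,
               ignored: Tuple[Type[BaseException], ...] = ()) -> T:
    """Poll `condition` until it returns a truthy value, or raise WaitTimeout.

    Exceptions listed in `ignored` count as "not ready yet" instead of failing.
    """
    deadline = time.monotonic() + timeout_s
    last_error: Optional[BaseException] = None
    while True:
        try:
            value = condition()
            if value:
                return value
        except ignored as exc:
            last_error = exc
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout_s}s") from last_error
        time.sleep(poll_s)


class ElementNotFound(Exception):
    pass


class FakeDriver:
    """Hypothetical driver whose elements appear only after a few lookups."""

    def __init__(self, appears_after: int) -> None:
        self._lookups = 0
        self._appears_after = appears_after
        self.typed: Dict[str, str] = {}

    def find(self, element_id: str) -> str:
        self._lookups += 1
        if self._lookups <= self._appears_after:
            raise ElementNotFound(element_id)
        return element_id

    def type_into(self, element_id: str, text: str) -> None:
        self.typed[element_id] = text


class LoginPage:
    """Page object: the waiting lives here, so tests just call enter_username()."""

    def __init__(self, driver: FakeDriver, timeout_s: float = 2.0) -> None:
        self.driver = driver
        self.timeout_s = timeout_s

    def enter_username(self, name: str) -> None:
        field = wait_until(lambda: self.driver.find("username"),
                           timeout_s=self.timeout_s, poll_s=0.01,
                           ignored=(ElementNotFound,))
        self.driver.type_into(field, name)


if __name__ == "__main__":
    driver = FakeDriver(appears_after=3)
    LoginPage(driver).enter_username("alice")
    print(driver.typed)
```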

3. Usage of reliable Locators

Another crucial practice is using reliable locators: choose locators that are unique, descriptive, and unlikely to change. A locator that does not meet these criteria is a bad locator and is likely to cause test failures.

A few best practices:

- Always look for unique locators.

- Use static IDs added by developers.

- Avoid dynamic IDs generated by web development frameworks; they change on every page load, which makes them useless as locators.

- If you use XPath or CSS selectors in Selenium, keep them short.
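
These guidelines can be partly automated. The sketch below is a hypothetical locator lint that flags absolute XPaths, IDs that look framework-generated, and overly long selectors. The regular expression and the 60-character limit are illustrative assumptions, not rules from Selenium, and would need tuning for a real project.

```python
import re
from typing import List

MAX_LENGTH = 60  # hypothetical threshold for "long" selectors; tune per project

# Hypothetical heuristic for framework-generated IDs, e.g. "ember1234" or "mat-input-3".
GENERATED_ID = re.compile(r"\d{3,}|[-_]\d+$")


def lint_locator(strategy: str, value: str) -> List[str]:
    """Return warnings for the brittle locator traits listed above."""
    warnings = []
    if strategy == "xpath":
        if value.startswith("/") and not value.startswith("//"):
            warnings.append("absolute XPath")
        for id_value in re.findall(r"@id=['\"]([^'\"]+)['\"]", value):
            if GENERATED_ID.search(id_value):
                warnings.append(f"generated-looking id: {id_value}")
    elif strategy == "id" and GENERATED_ID.search(value):
        warnings.append(f"generated-looking id: {value}")
    if strategy in ("xpath", "css selector") and len(value) > MAX_LENGTH:
        warnings.append("long selector")
    return warnings


if __name__ == "__main__":
    for strategy, value in [("xpath", "/html/body/div[1]/div[2]/form/div[1]/input"),
                            ("xpath", "//input[@id='username']"),
                            ("id", "ember1234")]:
        print(strategy, value, lint_locator(strategy, value))
```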

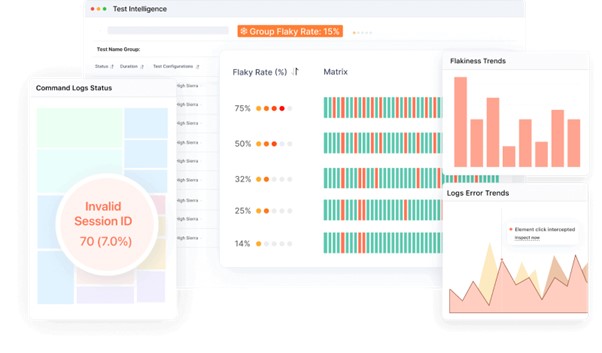

Leveraging LambdaTest’s AI-Powered Test Intelligence

LambdaTest’s Test Intelligence is designed to reduce flaky tests and improve test stability. Here are some key features of an AI-powered test intelligence platform like LambdaTest that can help fix flaky tests:

- Intelligent Flakiness Detection: Test Intelligence uses ML algorithms to analyze test execution data and detect flaky tests, helping teams prioritize fixes.

- Command Logs Error Trends Forecast: It forecasts error trends by analyzing command logs and gives proactive recommendations to resolve or prevent issues that affect test reliability.

- Bug Classification of Log Trends: It analyzes test logs, classifies errors based on trends, and helps identify recurring issues that contribute to flakiness.

- Anomalies in Test Execution across Platforms: It detects anomalies in test execution across platforms, helping QA teams prioritize efforts to improve stability in particular environments.
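
LambdaTest does not publish its detection algorithm, and the sketch below does not attempt to reproduce it. Instead, it shows a much simpler heuristic that many teams use as a starting point: a test is flagged as flaky if it both passed and failed on the same commit, meaning its outcome changed while the code did not. The record format and function names are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# One record per execution: (test name, commit SHA, passed?)
Run = Tuple[str, str, bool]


def find_flaky(runs: Iterable[Run]) -> Set[str]:
    """Flag a test as flaky if it both passed and failed on the same commit."""
    seen: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        seen[(test, commit)].add(passed)
    return {test for (test, _commit), outcomes in seen.items() if len(outcomes) == 2}


def flaky_report(runs: List[Run]) -> List[Tuple[str, int, int]]:
    """(test, failures, executions) for each flaky test, highest failure rate first."""
    flaky = find_flaky(runs)
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for test, _commit, passed in runs:
        if test in flaky:
            counts[test][1] += 1
            if not passed:
                counts[test][0] += 1
    rows = [(test, fails, total) for test, (fails, total) in counts.items()]
    return sorted(rows, key=lambda row: row[1] / row[2], reverse=True)


if __name__ == "__main__":
    history = [
        ("test_login", "a1", True), ("test_login", "a1", False),
        ("test_search", "a1", True), ("test_search", "a2", False),  # changed with the code
        ("test_cart", "a1", True), ("test_cart", "a1", True),
    ]
    print(flaky_report(history))
```

A test that fails only after a new commit, like `test_search` here, is treated as a possible regression rather than flakiness.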

By leveraging intelligent analysis and machine learning (ML), LambdaTest aims to improve the reliability and effectiveness of automated testing, leading to more robust software delivery.

Conclusion

Flaky Selenium tests can be a major obstacle in the software development process, but with the right strategies and knowledge, they can be managed and reduced efficiently. By understanding the causes, applying detection methods, and adopting best practices for writing flakiness-resistant tests, testers and developers can ensure the accuracy and reliability of their test suites.